If you frequently use image-to-video apps or AI models, you're likely familiar with various platforms such as Sora, Veo 3, Kling, and Hailuo AI. However, you might not have noticed that several leading AI generators are developed by Chinese teams. If you want to understand the best Chinese image-to-video AI generators and how to use them, this article is for you.

We cover the distinctive innovations and practical commercial value of Chinese teams, and we also provide a user-friendly guide and practical creative case studies.

Why Are Chinese Image-to-Video AIs Popular?

Chinese image-to-video AIs are widely searched, followed, and used because they meet specific needs with clear advantages. They rival OpenAI products in key performance areas such as video length and high-definition resolution, and they are highly cost-effective: generation costs can be as low as 4 cents per second, far lower than the pricing of Sora, Runway, and others. This significantly lowers the barrier to entry for creators.

Additionally, Chinese models tend to be more flexible. For example, while Sora doesn't widely offer real-person reference generation and has strict portrait rights restrictions, some Chinese open-source models adapt to global creation scenarios, allow unfiltered AI video creation, and support 119 languages. This lets individual creators and enterprises complete video production efficiently, which has attracted widespread attention from users worldwide.

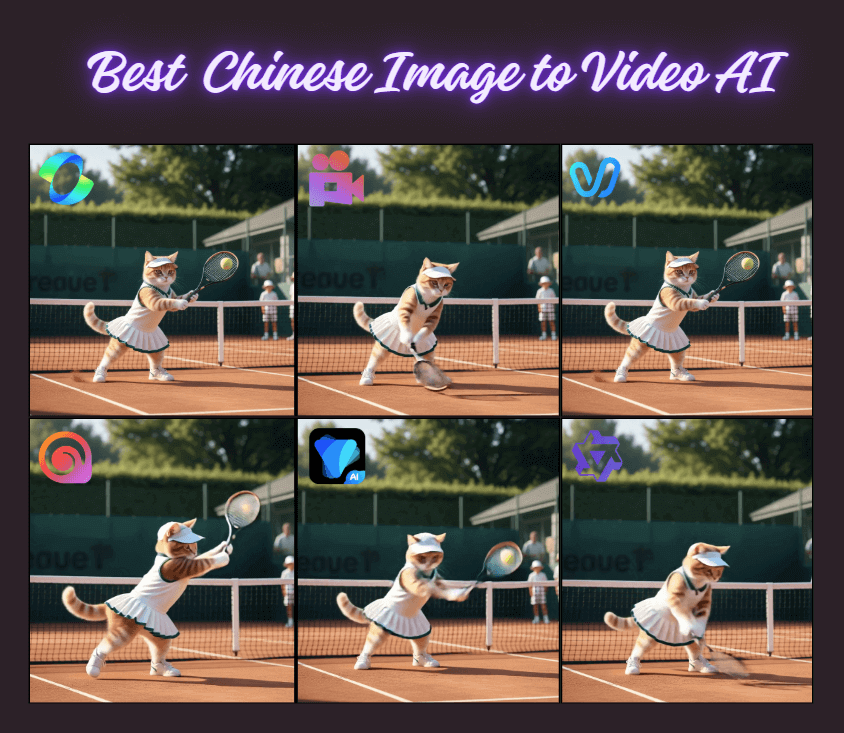

Best Chinese Image to Video AI

If you're choosing a Chinese image-to-video generator in 2026, prioritize three things: visual fidelity under motion (skin, fabric, lighting), shot-level control (lenses, camera moves, cuts), and your throughput-cost curve (how fast you get reliable clips at a price you can sustain).

Below we compare 6 leading models—what they're best for, why they're fast or slow, how they handle faces and typography, and where you'll hit limits—so you can match the right engine to your workflow and budget.

1. Kling 2.5 Turbo

Kling 2.5 Turbo is our top pick for professional Chinese image-to-video AI. It delivers 1080p cinematic quality, ultra-smooth motion, and exceptional prompt accuracy. It seamlessly translates complex multi-step instructions into coherent narratives, maintaining consistent colors, lighting, and style, even during high-speed action or dynamic camera movements. With faster inference speeds and 30% lower costs than previous versions, this model is a game-changer for marketers, YouTubers, and film studios. It enables scalable production of ads, explainer videos, and previsualizations, all while delivering studio-grade quality.

Though Kling recently launched the O1 model, it is still relatively new, and the cost can be quite steep for the average user. Therefore, I highly recommend Kling 2.5 Turbo as the best Kling model currently. It offers fast response times, significantly reduced costs, and excellent overall performance.

Features:

- Stable portrait/image-to-video with natural micro-expressions

- Smooth pan/tilt/dolly and usable slow motion with low jitter

- Strong vertical (9:16) defaults, auto-beat pacing, soundbed suggestions

- Quick drafts; predictable results across similar prompts

Pros

- Very fast throughput; low redo rate

- Consistent faces, hair, and fabric under motion

- Social-first framing that "just works"

Cons

- Limited granular lens/lighting control

- Narrative multi-shot stitching can feel templated

- Closed ecosystem; fewer advanced knobs

Best for: Brand Advertising / Film/TV Previsualization (Previs) / Long-form Video Production

2. PixVerse V5.5

PixVerse V5.5 improves on V5 with a new Audio-Visual Sync Engine and Multi-Shot Architecture. It generates crystal-clear 1080p clips in seconds, with extended durations of up to 10 seconds per generation. The updated model significantly improves temporal coherence, virtually eliminating "drift" to keep characters and environments consistent across longer cuts. Its multi-modal capabilities now include native audio generation, which syncs dialogue, sound effects, and background music directly to the visuals. With the introduction of multi-clip generation, it can produce narrative-driven sequences with dynamic camera transitions in a single pass. Affordable and versatile, PixVerse V5.5 lets creators, agencies, and filmmakers produce complete, sound-synced viral shorts and storytelling content quickly.

Features:

- Native Audio Generation: Auto-generates synchronized SFX, dialogue, and music matching the visual context.

- Multi-Clip & Shot Control: Generates dynamic cuts and camera transitions (wide to close-up) in one request.

- Extended Duration: Supports high-fidelity clips up to 10 seconds with consistent physics.

- Smart Prompt Optimization: "Thinking Type" parameter automatically enhances vague prompts for better fidelity.

Pros

- Exceptional character and scene consistency across multi-shot sequences

- Native sound integration removes the need for external post-production

- Drastically reduced "shimmer" and artifacting in complex movements

- Ultra-fast inference allows for rapid iteration of HD content

Cons

- Dialogue lip-sync precision can vary on complex scripts

- Requires detailed descriptive prompting to fully leverage the multi-shot capabilities

- Needs prompt finesse for strict realism

Best for: E-commerce Flash Ads / Narrative Storytelling / Music Videos / Viral Shorts with Sound

3. Wan 2.5

If you follow the LLM space, you've likely heard of Qwen. It's Alibaba's flagship foundation model—similar to GPT-4 or Llama—designed for text generation and multimodal understanding (like analyzing images and audio).

But Alibaba also has a counterpart called Wan. While Qwen handles the 'brain' work of language and understanding, Wan is the 'creative eye', focusing purely on visual generation. Think of Wan as their answer to Sora: it specializes in video creation, editing, and transforming text or images into video. Together, Qwen and Wan form a complete ecosystem covering both intelligence and creation.

Wan 2.5-Preview sets a new standard for cinematic image-to-video generation with synchronized audio and exceptional quality. It produces 10-second 1080p, 24fps videos with dynamic camera movements, atmospheric effects, and precise audio matching. Its advanced prompt understanding handles complex instructions, making it ideal for product showcases, architectural walkthroughs, and creative prototyping. Additionally, it supports image editing and bilingual prompts (Chinese/English), making it accessible to global users. This preview model highlights Alibaba's cutting-edge AI capabilities, bridging innovation with practical applications for business and creative projects.

Features:

- Explicit shot/lens/lighting parameters; consistent framing on demand

- Clear Chinese/English text within the scene with low shimmer

- Research/enterprise trajectory and ecosystem support

- Image conditioning plus detailed camera directives

Pros

- Best path to "describe it, get it" for cinematography

- Strong signage/UI rendering for product and OOH scenes

- Suits labs and enterprise integrations

Cons

- Preview artifacts and evolving quotas/UI

- Requires prompt engineering to hit top quality

Best for: Film Previsualization (Previs) / E-commerce 3D Showcases / Enterprise Creative Prototypes

4. Hailuo 2.3

Hailuo 2.3 specializes in stylized image-to-video content, excelling in anime, ink wash, and game CG styles with remarkable consistency. Its enhanced physical simulation brings complex movements—like dance and martial arts—to life with realistic fluidity. Additionally, it captures human microexpressions and emotional transitions, making it ideal for character-driven narratives. With cost-effective pricing that lowers bulk production costs by 50%, it's perfect for artists, marketers, and creators looking to bring their illustrations, ads, or personal projects to life in a highly artistic yet affordable way.

Features:

- Intuitive interface, including access via web, mobile apps, and open API

- Natural blinks and expressions; strong identity retention

- Aspect ratio presets and soundtrack suggestions

- Camera cues that work out of the box

- Minimal setup for social-ready clips

Pros

- Quick to "good enough" quality

- Great for talking-heads and portrait-led promos

- Low friction for non-experts

Cons

- Limited granular lens/lighting adjustments

- In-scene complex typography can shimmer

Best for: Animation Production / Game CG & Cinematics / Martial Arts & Dance Content

5. Seedance 1.0

Seedance 1.0 is from ByteDance's "Seed" research team. It is good for social media and narrative content, delivering smooth image-to-video generation in 1080p with synchronized audio. It offers two modes: Lite for fast, high-quality short clips (5-10 seconds) and Pro for multi-character interactions and advanced storytelling. Its native audio generation adds background music and sound effects, while vertical screen support is optimized for TikTok, Reels, and YouTube Shorts. Trusted by creators and marketers, it turns static images into engaging videos with commercial usage rights, making it ideal for professional projects.

Features:

- Beat-matching and hook-optimized framing for TikTok/Douyin

- Face handling tuned for social aesthetics

- Team libraries, presets, and fast variant iteration

- Workflow designed for high-volume campaign testing

Pros

- Highest probability of post-ready clips with minimal edits

- Excellent for rapid A/B testing and scaling

- Metrics-aware defaults (timing, cropping, rhythm)

Cons

- Guardrails limit extreme or niche aesthetics

- Potential ecosystem lock-in; fewer deep controls

Best for: Social Media Skits & Dramas / Product Demos / Educational Content

6. Vidu Q2

Vidu Q2 transforms static images into emotionally resonant, film-like videos with nuanced storytelling and "AI acting." It captures microexpressions like trembling lips and thoughtful glances, while executing fluid camera moves and dynamic multi-character interactions—even in intense action scenes. Offering two modes—Turbo for fast 20-second 1080p videos and Pro for high-fidelity cinematic detail—it caters to both quick social media content and detailed creative projects. With sharp semantic understanding, it turns simple prompts into vivid, stable videos that captivate audiences with authentic emotion and visual impact.

Features:

- Director-like sequences with native cuts and lens persistence

- Good low-light handling, reflections, and volumetrics

- Robust cultural aesthetics (period pieces, traditional styles)

- Shot/camera directives for structured storytelling

Pros

- Most "film-like" continuity at this tier

- Reliable motion physics and scene carryover

- Great for teasers, narrative ads, research prototypes

Cons

- Higher credit consumption for multi-beat sequences

- Steeper learning curve for shot-level prompting

Best for: Short Video Platforms (TikTok/Reels) / Narrative Short Films / Emotional Ad Campaigns

7. Quick Comparison

| Model | Developer | Access Difficulty | Cost ($/sec) | Generation Speed | Resolution | Notes |
|---|---|---|---|---|---|---|
| Kling 2.5 Turbo | Kuaishou Technology | Medium | 0.07 | 3–5 min per 5–10s clip | 1080p | Cost via API is lower than credit-based systems. A 5-second 1080p clip previously cost 35 credits. |
| PixVerse V5.5 | Aishi Technology | Easy | 0.09–0.24 | <2 min per clip | 1080p | Offers multiple resolution options (360p to 1080p). |
| Wan 2.5-Preview | Alibaba | Hard | 0.1875 | 3–5 min per 10s clip | 1080p | Open-source model from Alibaba. |
| Hailuo 2.3 | MiniMax | Easy | 0.117 | 3–5 min per clip | 1080p | Higher-priced unlimited and subscription plans are available. |
| Seedance 1.0 | ByteDance | Hard | 0.89 | 41.4 sec per 5s clip | 1080p | Capable of fast 1080p generation. |
| Vidu Q2 | Shengshu Technology | Medium | 0.003 | In seconds | 1080p | Offers high-quality, complex motion and emotional expression. |
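
To turn the per-second prices in the table into a budget estimate, multiply the rate by the clip length and the number of clips. The Python sketch below does only that arithmetic, using the table's figures as-is. The function names `clip_cost` and `rank_by_cost` are illustrative, not part of any vendor's API, and real bills may differ because of credit bundles, subscriptions, or failed generations.

```python
from __future__ import annotations

# USD per second of generated video, copied from the comparison table.
# Each entry is a (low, high) pair; only PixVerse is listed as a range.
PRICE_PER_SECOND = {
    "Kling 2.5 Turbo": (0.07, 0.07),
    "PixVerse V5.5": (0.09, 0.24),
    "Wan 2.5-Preview": (0.1875, 0.1875),
    "Hailuo 2.3": (0.117, 0.117),
    "Seedance 1.0": (0.89, 0.89),
    "Vidu Q2": (0.003, 0.003),
}


def clip_cost(model: str, seconds: float, clips: int = 1) -> tuple[float, float]:
    """Return the (low, high) estimated cost in USD for `clips` clips of `seconds` each."""
    low, high = PRICE_PER_SECOND[model]
    total_seconds = seconds * clips
    return (round(low * total_seconds, 4), round(high * total_seconds, 4))


def rank_by_cost(seconds: float, clips: int = 1) -> list[tuple[str, tuple[float, float]]]:
    """List models from cheapest to most expensive, sorted by the upper cost bound."""
    costs = {model: clip_cost(model, seconds, clips) for model in PRICE_PER_SECOND}
    return sorted(costs.items(), key=lambda item: item[1][1])


if __name__ == "__main__":
    # Example: a batch of twenty 10-second clips.
    for model, (low, high) in rank_by_cost(seconds=10, clips=20):
        if low == high:
            print(f"{model}: ${low:.2f}")
        else:
            print(f"{model}: ${low:.2f} to ${high:.2f}")
```

Sorting by the upper bound is a conservative choice: a model priced as a range is ranked by the most you might pay, which is the safer number for budgeting.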

How to Use Chinese Image to Video AI?

Most Chinese image-to-video tools follow a simple three-step workflow: upload your source image, enter your text prompt (in English), and generate your video.

For creators working across multiple platforms or producing high volumes of content, managing several browser tabs can slow you down. That's where integration solutions become valuable. Tools like Novi AI bring multiple AI video models—including Kling, Pixverse, Vidu, and Veo—into a single online environment, letting you test different engines without switching contexts. You can even apply Life Like Realistic Veo 3 Prompts to compare high-end Western outputs against these Chinese alternatives.

A great video requires more than just a great clip. You need to research ideas, write compelling scripts, and maybe even translate content for a global audience. This is where Novi AI evolves from a simple video tool into your all-in-one creative partner.

More Features from Novi AI

- One-Click Script-to-Long-Video: Turn your script into a multi-scene long-form video in one click—complete with subtitles and natural voiceover, ready to publish.

- Multi-Shot Storyboarding: Automatically split your script into structured shots with scene descriptions, pacing, and transitions—so your video stays coherent from start to finish.

- More Creation Support: Flexible image and video models designed for all-in-one generation—ready to use. Try Nano Banana prompts to create kids' photography directly on this platform.

Popular Use Cases

Once you've chosen your model, here are three proven content strategies that creators are using to generate revenue and grow their audiences:

AI-generated fight videos: Creators use advanced Chinese AI video generation tools (like Hailuo AI or Kling AI) to produce short, dynamic, and often humorous clips that place characters or user-uploaded photos in martial arts or combat scenarios.

AI Pet Sitcoms: Pet owners upload photos of their cats or dogs and generate anthropomorphic videos—cats cooking breakfast, dogs running farms. These wholesome, shareable clips attract massive engagement on TikTok and Instagram, driving revenue through platform monetization programs.

Healing Meditation Videos: Creators upload landscape photos (beaches, forests, mountains) to generate 10-minute ambient videos paired with soothing music. These "sleep-aid white noise" or "stress-relief meditation" videos posted to YouTube can earn passive income through the YouTube Partner Program. Because viewers return to them repeatedly, a single meditation video can generate 10x the long-term revenue of a typical short-form clip.

FAQs

1. Do I need to know Chinese to use Chinese image-to-video tools?

No. All mainstream Chinese AI video tools support English prompts and offer English interface options. The workflow is identical to other image-to-video platforms you may have used: upload image → enter prompt → generate video. Device requirements are minimal—most tools run in your browser without special hardware.

2. Is Chinese image-to-video AI worth paying for?

Chinese image-to-video tools typically offer competitive pricing, making them ideal for experimentation. If you're producing content regularly, paid tiers unlock faster generation speeds and higher resolution outputs. If platform-hopping becomes tedious, aggregators like Novi AI let you trial multiple models from one place before committing to a subscription.

Conclusion

Chinese image-to-video AI has matured rapidly, offering professional-grade results at accessible price points. Whether you're creating social content, marketing materials, or film previsualization, the key is matching the right model to your specific needs—motion fidelity for character work, prompt accuracy for complex scenes, or cost efficiency for high-volume production. The tools are here; the only limit is your creativity.


Trustpilot Rating 4.8